Prof. Tirup Parmar

Unit I
Introduction: What is an operating system? History of operating systems, computer hardware, different operating systems, operating system concepts, system calls, operating system structure.
Processes and Threads: Processes, threads, interprocess communication, scheduling, IPC problems.

Unit II
Memory Management: No memory abstraction, memory abstraction: address spaces, virtual memory, page replacement algorithms, design issues for paging systems, implementation issues, segmentation.
File Systems: Files, directories, file system implementation, file-system management and optimization, MS-DOS file system, UNIX V7 file system, CD ROM file system.

Unit III
Input-Output: Principles of I/O hardware, principles of I/O software, I/O software layers, disks, clocks, user interfaces: keyboard, mouse, monitor, thin clients, power management.
Deadlocks: Resources, introduction to deadlocks, the ostrich algorithm, deadlock detection and recovery, deadlock avoidance, deadlock prevention, issues.

Unit IV
Virtualization and Cloud: History, requirements for virtualization, type 1 and 2 hypervisors, techniques for efficient virtualization, hypervisor microkernels, memory virtualization, I/O virtualization, virtual appliances, virtual machines on multicore CPUs, clouds.
Multiple Processor Systems: Multiprocessors, multicomputers, distributed systems.

Unit V
Case Study on LINUX and ANDROID: History of Unix and Linux, Linux overview, processes in Linux, memory management in Linux, I/O in Linux, Linux file system, security in Linux, Android.
Case Study on Windows: History of Windows through Windows 10, programming Windows, system structure, processes and threads in Windows, memory management, caching in Windows, I/O in Windows, Windows NT file system, Windows power management, security in Windows.

Video Lectures :- https://www.youtube.com/c/TirupParmar

UNIT I
Chapter 1 Introduction to Operating System

Q-1) WHAT IS AN OPERATING SYSTEM? And two essentially unrelated functions of OS

Computers are equipped with a layer of software called the operating system, whose job is to provide user programs with a better, simpler, cleaner model of the computer and to handle managing all the resources.

There are many operating systems, such as Windows, Linux, FreeBSD, or OS X, but appearances can be deceiving. The program that users interact with, usually called the shell when it is text based and the GUI (Graphical User Interface) when it uses icons, is actually not part of the operating system, although it uses the operating system to get its work done.

The Operating System as an Extended Machine:

The architecture (instruction set, memory organization, I/O, and bus structure) of most computers at the machine-language level is primitive and awkward to program, especially for input/output.

To make this point more concrete, consider the modern SATA (Serial ATA) hard disks used on most computers.

No programmer would want to deal with this disk at the hardware level. Instead, a piece of software called a disk driver deals with the hardware and provides an interface to read and write disk blocks. Operating systems contain many drivers for controlling I/O devices.

But even this level is much too low for most applications. For this reason, all operating systems provide yet another layer of abstraction for using disks: files. Using this abstraction, programs can create, write, and read files without having to deal with the messy details of how the hardware actually works.

One abstraction that almost every computer user understands is the file, as mentioned above. It is a useful piece of information, such as a digital photo, saved email message, song, or Web page. It is much easier to deal with photos, emails, songs, and Web pages than with the details of SATA (or other) disks.

The job of the operating system is to create good abstractions.

One of the major tasks of the operating system is to hide the hardware and present programs (and their programmers) with nice, clean, elegant, consistent abstractions to work with.

The Operating System as a Resource Manager:

Resource management includes multiplexing (sharing) resources in two different ways: in time and in space.

When a resource is time multiplexed, different programs or users take turns using it. First one of them gets to use the resource, then another, and so on.

For example, with only one CPU and multiple programs that want to run on it, the operating system first allocates the CPU to one program; then, after it has run long enough, another program gets to use the CPU, then another, and eventually the first one again.

Determining how the resource is time multiplexed (who goes next and for how long) is the task of the operating system.

Another example of time multiplexing is sharing the printer. When multiple print jobs are queued up for printing on a single printer, a decision has to be made about which one is to be printed next.
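The turn-taking described above can be illustrated with a small simulation. This is only a sketch under stated assumptions: the notes do not name a scheduling policy, so a simple round-robin queue is assumed, and the function name round_robin, the job names, and the quantum value are all hypothetical.

```python
from collections import deque


def round_robin(jobs, quantum):
    """Simulate time multiplexing of one CPU (assumed round-robin policy).

    jobs: dict mapping a job name to the CPU time it still needs.
    quantum: longest slice a job may run before the next job gets a turn.
    Returns the list of (job name, time run) slices in the order they ran.
    """
    if quantum <= 0:
        raise ValueError("quantum must be positive")
    queue = deque(jobs.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        ran = min(quantum, remaining)
        timeline.append((name, ran))
        if remaining > ran:
            # Not finished yet: go to the back of the queue and wait for another turn.
            queue.append((name, remaining - ran))
    return timeline


if __name__ == "__main__":
    for name, ran in round_robin({"A": 5, "B": 2, "C": 4}, quantum=2):
        print(f"{name} runs for {ran} time unit(s)")
```

With three jobs and a quantum of 2, job A gets the CPU first, then B, then C, and then A again, which mirrors the "then eventually the first one again" behaviour described in the text.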

The other kind of multiplexing is space multiplexing. Instead of taking turns, each program gets part of the resource.

For example, main memory is normally divided up among several running programs, so each one can be resident at the same time (for example, in order to take turns using the CPU).

HISTORY OF OPERATING SYSTEMS

Q-2) Explain third generation OS. (Nov. 2016)

Since operating systems have historically been closely tied to the architecture of the computers on which they run, we will look at successive generations of computers to see what their operating systems were like.

The First Generation (1945–55): Vacuum Tubes

In the early days, a single group of people (usually engineers) designed, built, programmed, operated, and maintained each machine.

All programming was done in absolute machine language.

Programming languages were unknown (even assembly language was unknown).

Operating systems were unheard of.

The usual mode of operation was for the programmer to sign up for a block of time using the signup sheet on the wall, then come down to the machine room, insert his or her plugboard into the computer, and spend the next few hours hoping that none of the 20,000 or so vacuum tubes would burn out during the run.

All the problems were simple mathematical and numerical calculations.

The Second Generation (1955–65): Transistors and Batch Systems

The introduction of the transistor in the mid-1950s changed the picture radically.

Computers became reliable enough that they could be manufactured and sold to paying customers with the expectation that they would continue to function long enough to get some useful work done.

These machines, now called mainframes, were locked away in large, specially air-conditioned computer rooms, with staffs of professional operators to run them.

Only large corporations, major government agencies, or universities could afford the multimillion-dollar price tag.

To run a job (i.e., a program or set of programs), a programmer would first write the program on paper (in FORTRAN or assembler), then punch it on cards.

Given the high cost of the equipment, people looked for ways to reduce the wasted time. The solution was the batch system.

The idea behind it was to collect a tray full of jobs in the input room and then read them onto a magnetic tape using a small, (relatively) inexpensive computer.

The Third Generation (1965–1980): ICs and Multiprogramming

The IBM 360 was the first major computer line to use (small-scale) ICs (Integrated Circuits), thus providing a major price/performance advantage over the second-generation machines, which were built up from individual transistors.

Nowadays, descendants of these machines are often used for managing huge databases (e.g., for airline reservation systems) or as servers for World Wide Web sites that must process thousands of requests per second.

Multiprogramming: memory is partitioned into several pieces, with a different job in each partition. While one job was waiting for I/O to complete, another job could be using the CPU.

Spooling: jobs were read from cards onto the disk as soon as they were brought to the computer room. Then, whenever a running job finished, the operating system could load a new job from the disk into the now-empty partition and run it. This technique is called spooling (from Simultaneous Peripheral Operation On Line) and was also used for output.

Timesharing: a variant of multiprogramming in which each user has an online terminal. In a timesharing system, if 20 users are logged in and 17 of them are thinking or talking or drinking coffee, the CPU can be allocated in turn to the three jobs that want service.

The Fourth Generation (1980–Present): Personal Computers

With the development of LSI (Large Scale Integration) circuits (chips containing thousands of transistors on a square centimeter of silicon), the age of the personal computer dawned. These machines were initially called microcomputers.

The Macintosh was user friendly, meaning that it was intended for users who not only knew nothing about computers but furthermore had absolutely no intention whatsoever of learning.

Mac OS X is a UNIX-based operating system and is very widely used. When Microsoft decided to build a successor to MS-DOS, it was strongly influenced by the success of the Macintosh.

This period also saw the development of network operating systems and distributed operating systems.

In a network operating system, the users are aware of the existence of multiple computers and can log in to remote machines and copy files from one machine to another.

A distributed operating system, in contrast, is one that appears to its users as a traditional uniprocessor system, even though it is actually composed of multiple processors. The users should not be aware of where their programs are being run or where their files are located; that should all be handled automatically and efficiently by the operating system.

Distributed systems, for example, often allow applications to run on several processors at the same time.

The Fifth Generation (1990–Present): Mobile Computers

The idea of combining telephony and computing in a phone-like device became real when Nokia released the N9000, which literally combined two mostly separate devices: a phone and a PDA (Personal Digital Assistant).

Now that smartphones have become ubiquitous, Google's Android is the dominant operating system, with Apple's iOS a clear second.

Most smartphones in the first decade after their inception ran Symbian OS. It was the choice of brands like Samsung, Sony Ericsson, Motorola, and especially Nokia.

Other operating systems, like RIM's Blackberry OS (introduced for smartphones in 2002) and Apple's iOS (released for the first iPhone in 2007), started eating into Symbian's market share.

In 2011, Nokia ditched Symbian and announced it would focus on Windows Phone as its primary platform.

It did not take long for Android, a Linux-based operating system released by Google in 2008, to overtake all its rivals.

For phone manufacturers, Android had the advantage that it was open source and available under a permissive license.

It also has a huge community of developers writing apps, mostly in the familiar Java programming language.

Q-3) Explain types of Computer memory.

Memory is a major part of a computer and is divided into several types. It is where users save information, programs, and so on. A computer offers several kinds of storage media: some store data temporarily and some store it permanently. Memory holds the instructions and the data that the Central Processing Unit (CPU) works on.

Types of Computer Memory:

Memory is an essential element of a computer, because without it the computer cannot perform even simple tasks. The performance of a computer depends mainly on its memory and its CPU. Computer memory is broadly categorized into two types, main (primary) memory and secondary memory:

1. Primary Memory / Volatile Memory.
2. Secondary Memory / Non Volatile Memory.

1. Primary Memory / Volatile Memory:

Primary memory is also called volatile memory because it cannot store data permanently. Any location in primary memory can be selected when the user wants to save data, but the data is not kept at that location permanently. Primary memory is also known as RAM.

Random Access Memory (RAM):

Primary storage is referred to as random access memory (RAM) because any memory location can be selected directly, in any order. It supports both read and write operations. If a power failure occurs while the system is running, the data held in RAM is lost permanently, so RAM is volatile memory. RAM is categorized into the following types.

Dynamic random-access memory (DRAM) is a type of random-access memory that stores each bit of data in a separate capacitor within an integrated circuit. The capacitor can be either charged or discharged; these two states are taken to represent the two values of a bit, conventionally called 0 and 1.

SRAM (static RAM) is random access memory that retains data bits in its memory as long as power is being supplied. Unlike dynamic RAM (DRAM), which stores bits in cells consisting of a capacitor and a transistor, SRAM does not have to be periodically refreshed.

DRDRAM (Rambus Dynamic Random Access Memory) is a memory subsystem that promises to transfer up to 1.6 billion bytes per second. The subsystem consists of the random access memory (RAM), the RAM controller, and the bus (path) connecting RAM to the microprocessor and to the devices in the computer that use it.

2. Secondary Memory / Non Volatile Memory:

Secondary memory is external, permanent memory, provided by storage media such as floppy disks, magnetic disks, magnetic tapes, and so on. The following non-volatile components are described under this heading.

Read Only Memory (ROM):

ROM is permanent memory that comes in several standard variants. It supports only read operations during normal use. No data is lost when a power failure occurs, so ROM is non-volatile.

The main ROM variants are the following.

1. PROM: Programmable Read Only Memory (PROM) can be programmed by the user but offers no erase feature. A PROM chip is written once and read many times. The programs or instructions stored in a PROM cannot be erased by other programs.
2. EPROM: Erasable Programmable Read Only Memory was designed to overcome the limitations of PROM and ROM. Users can erase an EPROM by exposing the chip to ultraviolet light, after which the chip can be reprogrammed.
3. EEPROM: Electrically Erasable Programmable Read Only Memory is similar to EPROM, but it is erased with an electrical signal instead of ultraviolet light.

Cache Memory: Main memory is slower than the CPU, so the CPU loses performance while it waits for data. Maintaining a cache memory reduces this speed mismatch. Main memory can store a huge amount of data, but cache memory is normally kept small because it is fast and expensive. Data from slower devices, such as magnetic disks and other external media, can also be held in a cache to give users quicker access.

Q-4) Briefly explain Monolithic architecture of operating system.

Monolithic Systems :

In the monolithic approach the entire operating system runs as a single program in kernel mode.

To construct the actual object program of the operating system when this approach is used, one first compiles all the individual procedures (or the files containing the procedures) and then binds them all together into a single executable file using the system linker.

In terms of information hiding, there is essentially none: every procedure is visible to every other procedure (as opposed to a structure containing modules or packages, in which much of the information is hidden away inside modules, and only the officially designated entry points can be called from outside the module).

Even in monolithic systems, however, it is possible to have some structure.

The services (system calls) provided by the operating system are requested by putting the parameters in a well-defined place (e.g., on the stack) and then executing a trap instruction.

This instruction switches the machine from user mode to kernel mode and transfers control to the operating system.

The operating system then fetches the parameters and determines which system call is to be carried out.

After that, it indexes into a table that contains in slot k a pointer to the procedure that carries out system call k.

This organization suggests a basic structure for the operating system:

1. A main program that invokes the requested service procedure.
2. A set of service procedures that carry out the system calls.
3. A set of utility procedures that help the service procedures.
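The table lookup and the three-part structure listed above can be modelled in a few lines. This is a toy user-space simulation, not real kernel code: the names main_program, sys_getpid, sys_write, append_to_console, and the state dictionary are hypothetical and chosen only for illustration.

```python
# Utility procedure: a helper that service procedures can share.
def append_to_console(state, text):
    state["console"].append(text)
    return len(text)


# Service procedures: one per system call.
def sys_getpid(state):
    return state["pid"]


def sys_write(state, text):
    return append_to_console(state, text)


# Slot k holds the procedure that carries out system call k.
SYSCALL_TABLE = [sys_getpid, sys_write]


def main_program(state, number, *params):
    """Main program: look up system call `number` in the table and invoke it."""
    if not 0 <= number < len(SYSCALL_TABLE):
        return -1  # unknown system-call number
    return SYSCALL_TABLE[number](state, *params)


if __name__ == "__main__":
    state = {"pid": 42, "console": []}
    print(main_program(state, 0))           # system call 0: get the process id
    print(main_program(state, 1, "hello"))  # system call 1: write to the console
```

In a real system the jump into main_program would be made by the trap instruction, and the table would hold addresses of kernel procedures rather than Python functions.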

This division of the procedures into three layers is shown in Fig.

In addition to the core operating system that is loaded when the computer is booted, many operating systems support loadable extensions, such as I/O device drivers and file systems.

These components are loaded on demand. In UNIX they are called shared libraries. In Windows they are called DLLs (Dynamic-Link Libraries). They have file extension .dll, and the C:\Windows\system32 directory on Windows systems has well over 1000 of them.

Q-5) What is system calls? Explain its types.
OR
List and explain system calls for process management. (Nov. 2016)

SYSTEM CALLS

1. System Calls for Process Management
2. System Calls for File Management
3. System Calls for Directory Management
4. Miscellaneous System Calls
5. The Windows Win32 API

System Calls for Process Management:

The first group of calls in Fig. deals with process management.

Fork is a good place to start the discussion.

Fork is the only way to create a new process in POSIX.

It creates an exact duplicate of the original process, including all the file descriptors, registers, and everything else.

After the fork, the original process and the copy (the parent and child) go their separate ways.

All the variables have identical values at the time of the fork, but since the parent's data are copied to create the child, subsequent changes in one of them do not affect the other one. (The program text, which is unchangeable, is shared between parent and child.)

The fork call returns a value, which is zero in the child and equal to the child's PID (Process IDentifier) in the parent.

Using the returned PID, the two processes can see which one is the parent process and which one is the child process.
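The behaviour of fork described above can be tried out from Python, whose os.fork, os.waitpid, and os._exit functions are thin wrappers around the corresponding POSIX calls. This is a minimal sketch that assumes a UNIX-like system (os.fork is not available on Windows); the function name fork_demo and the counter variable are made up for the example.

```python
import os


def fork_demo():
    """Fork once and show that parent and child have separate copies of data.

    Returns (child_pid, value_seen_by_child, value_seen_by_parent).
    """
    counter = 10
    pid = os.fork()
    if pid == 0:
        # Child: fork returned zero. Changing the copy does not affect the parent.
        counter += 1
        os._exit(counter)  # report the child's value through its exit status
    # Parent: fork returned the child's PID. Wait for the child to finish.
    _, status = os.waitpid(pid, 0)
    return pid, os.WEXITSTATUS(status), counter


if __name__ == "__main__":
    child_pid, child_value, parent_value = fork_demo()
    print(f"child {child_pid} saw {child_value}, parent still has {parent_value}")
```

The child increments its own copy of counter, while the parent's copy keeps the value it had at the time of the fork.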

System Calls for File Management:

To read or write a file, it must first be opened using open.

This call specifies the file name to be opened, either as an absolute path name or relative to the working directory, as well as a code of O_RDONLY, O_WRONLY, or O_RDWR, meaning open for reading, writing, or both. To create a new file, the O_CREAT parameter is used.

The file descriptor returned can then be used for reading or writing. Afterward, the file can be closed by close, which makes the file descriptor available for reuse on a subsequent open.

Although most programs read and write files sequentially, some application programs need to be able to access any part of a file at random. Associated with each file is a pointer that indicates the current position in the file. When reading (writing) sequentially, it normally points to the next byte to be read (written).

The lseek call changes the value of the position pointer, so that subsequent calls to read or write can begin anywhere in the file.

System Calls for Directory Management:

In this section we will look at some system calls that relate more to directories or the file system as a whole, rather than just to one specific file as in the previous section.

The first two calls, mkdir and rmdir, create and remove empty directories, respectively.

The next call is link. Its purpose is to allow the same file to appear under two or more names, often in different directories.

A typical use is to allow several members of the same programming team to share a common file, with each of them having the file appear in his own directory, possibly under different names.
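The file and directory calls described above can be exercised together in a short sketch. It uses Python's os module, whose open, write, lseek, read, close, mkdir, and link functions wrap the system calls of the same names. The directory names alice and bob, the file names memo and note, and the function name link_demo are hypothetical, and the example assumes a file system that supports hard links.

```python
import os
import tempfile


def link_demo():
    """Create one file, give it a second name with link, and read it back.

    Returns (bytes read through the first name, True if both names are one file).
    """
    with tempfile.TemporaryDirectory() as base:
        dir_a = os.path.join(base, "alice")
        dir_b = os.path.join(base, "bob")
        os.mkdir(dir_a)
        os.mkdir(dir_b)
        original = os.path.join(dir_a, "memo")
        alias = os.path.join(dir_b, "note")

        # Create the file and write to it through its first name.
        fd = os.open(original, os.O_WRONLY | os.O_CREAT, 0o644)
        os.write(fd, b"first draft")
        os.close(fd)

        # Make the same file appear under a second name in another directory.
        os.link(original, alias)

        # Append through the second name.
        fd = os.open(alias, os.O_WRONLY | os.O_APPEND)
        os.write(fd, b", revised")
        os.close(fd)

        # Read through the first name, using lseek to skip the first 6 bytes.
        fd = os.open(original, os.O_RDONLY)
        os.lseek(fd, 6, os.SEEK_SET)
        data = os.read(fd, 100)
        os.close(fd)

        same_file = os.stat(original).st_ino == os.stat(alias).st_ino
        return data, same_file


if __name__ == "__main__":
    print(link_demo())
```

The text appended through the second name is visible when reading through the first one, because both names refer to a single file.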

Sharing a file is not the same as giving every team member a private copy; having a shared file means that changes that any member of the team makes are instantly visible to the other members, because there is only one file.

When copies are made of a file, subsequent changes made to one copy do not affect the others.

Miscellaneous System Calls:

A variety of other system calls exist as well. We will look at just one of them here, chdir.

The chdir call changes the current working directory.

After the call chdir("/usr/ast/test"); an open on the file xyz will open /usr/ast/test/xyz.

The concept of a working directory eliminates the need for typing (long) absolute path names all the time.

The Windows Win32 API:

Windows also has system calls. With UNIX, there is almost a one-to-one relationship between the system calls (e.g., read) and the library procedures (e.g., read) used to invoke the system calls.

With Windows, the situation is radically different. To start with, the library calls and the actual system calls are highly decoupled. Microsoft has defined a set of procedures called the Win32 API (Application Programming Interface) that programmers are expected to use to get operating system services.

Q-6) Write a note on layered Architecture of OS.

Layered Systems:

Layer 1 did the memory management. It allocated space for processes in main memory and on a 512K word drum used for holding parts of processes (pages) for which there was no room in main memory. Above layer 1, processes did not have to worry about whether they were in memory or on the drum; the layer 1 software took care of making sure pages were brought into memory at the moment they were needed and removed when they were not needed.

Layer 2 handled communication between each process and the operator console (that is, the user). On top of this layer each process effectively had its own operator console.

Layer 3 took care of managing the I/O devices and buffering the information streams to and from them. Above layer 3 each process could deal with abstract I/O devices with nice properties, instead of real devices with many peculiarities.

Layer 4 was where the user programs were found. They did not have to worry about process, memory, console, or I/O management.

The system operator process was located in layer 5.

Q-7) Write a note on Microkernel systems. (March 2017)

Q-8) Explain Client-Server architecture of OS. (Oct 2016)

Client-Server Model:

A slight variation of the microkernel idea is to distinguish two classes of processes: the servers, each of which provides some service, and the clients, which use these services.

This model is known as the client-server model.

Often the lowest layer is a microkernel, but that is not required. The essence is the presence of client processes and server processes.

Communication between clients and servers is often by message passing.

To obtain a service, a client process constructs a message saying what it wants and sends it to the appropriate service.

The service then does the work and sends back the answer.

If the client and server happen to run on the same machine, certain optimizations are possible, but conceptually, we are still talking about message passing here.

Q-9) What is difference between timesharing and multiprogramming systems?

Time-sharing Operating Systems:

Time-sharing is a technique which enables many people, located at various terminals, to use a particular computer system at the same time.

Time-sharing or multitasking is a logical extension of multiprogramming.

Sharing the processor's time among multiple users simultaneously is termed time-sharing.

The main difference between Multiprogrammed Batch Systems and Time-Sharing Systems is that in Multiprogrammed Batch Systems the objective is to maximize processor use, whereas in Time-Sharing Systems the objective is to minimize response time.

Multiple jobs are executed by the CPU by switching between them, and the switches occur so frequently that the user can receive an immediate response.

For example, in transaction processing, the processor executes each user program in a short burst or quantum of computation.

That is, if n users are present, then each user gets a time quantum in turn.

When the user submits a command, the response time is a few seconds at most.

The operating system uses CPU scheduling and multiprogramming to provide each user with a small portion of the processor's time.

Computer systems that were designed primarily as batch systems have been modified to time-sharing systems.

Advantages of Time-sharing operating systems are as follows:
Provides the advantage of quick response
Avoids duplication of software
Reduces CPU idle time

Disadvantages of Time-sharing operating systems are as follows:
Problem of reliability
Question of security and integrity of user programs and data
Problem of data communication

Distributed Operating System

Distributed systems use multiple central processors to serve multiple real-time applications and multiple users.

Data processing jobs are distributed among the processors accordingly.

The processors communicate with one another through various communication lines (such as high-speed buses or telephone lines).

These are referred to as loosely coupled systems or distributed systems.

Processors in a distributed system may vary in size and function.

These processors are referred to as sites, nodes, computers, and so on.

The advantages of distributed systems are as follows:

With the resource sharing facility, a user at one site may be able to use the resources available at another.
Speeds up the exchange of data between sites via electronic mail.
If one site fails in a distributed system, the remaining sites can potentially continue operating.
Better service to the customers.
Reduction of the load on the host computer.
Reduction of delays in data processing.

Q-10) What is the difference between kernel and user mode? Explain how having two distinct modes aids in designing an OS.

Operating systems are designed with two major modes of operation:

Kernel Mode

In kernel mode, the running code has direct access to the hardware: it can execute any CPU instruction and reference any memory address.

Code in this mode runs directly on the hardware with no restrictions and can read and write memory directly.

Kernel mode is the protected part of the operating system. It hides critical resources from users who might damage the system, and the operating system separates kernel programs and user programs into different modes.

For instance, a hardware driver (software) runs in kernel mode once it is installed. In addition, a crash in kernel mode is hard to deal with because it is not recoverable.

User Mode

In user mode, the running code cannot access the hardware directly (it gets access through system calls) and cannot reference arbitrary memory directly.

It is the normal mode in which we use programs such as Internet Explorer, a media player, and so on.

Most of the code running on a computer runs in this mode.

User-mode code has no direct access to any critical part of the operating system, so a problem here is recoverable: only that application crashes, not the entire system.

The two modes are enforced by the CPU hardware, and they are extremely important for the safety of the operating system. Kernel mode covers the critical data structures that operate the machine, direct hardware access, and direct memory access; if any of these were harmed, the whole system would halt. Because user programs cannot run in kernel mode, they cannot reach any of these critical areas. Thanks to this distinction, users work in user mode and the operating system is protected from harm.

Q-11) What is a trap instruction? Explain its use in OS.

To obtain services from the operating system, a user program must make a system call, which traps into the kernel and invokes the operating system. The TRAP instruction switches from user mode to kernel mode and starts the operating system. When the work has been completed, control is returned to the user program at the instruction following the system call.

If a process is running a user program in user mode and needs a system service, such as reading data from a file, it has to execute a trap instruction to transfer control to the operating system.

System calls are performed in a series of steps. To make this concept clearer, let us examine the read call, which takes three parameters: a file descriptor, a buffer, and the number of bytes to read. In preparation for calling the read library procedure, which actually makes the read system call, the calling program first pushes the parameters onto the stack, as shown in steps 1–3 in Fig.

C and C++ compilers push the parameters onto the stack in reverse order for historical reasons (having to do with making the first parameter to printf, the format string, appear on top of the stack). The first and third parameters are passed by value, but the second parameter is passed by reference, meaning that the address of the buffer (indicated by &) is passed, not the contents of the buffer.

Then comes the actual call to the library procedure (step 4). This instruction is the normal procedure-call instruction used to call all procedures.

The library procedure, possibly written in assembly language, typically puts the system-call number in a place where the operating system expects it, such as a register (step 5).

Then it executes a TRAP instruction to switch from user mode to kernel mode and start execution at a fixed address within the kernel (step 6).

The kernel code that starts following the TRAP examines the system-call number and then dispatches to the correct system-call handler, usually via a table of pointers to system-call handlers indexed on system-call number (step 7).

At that point the system-call handler runs (step 8).

Once it has completed its work, control may be returned to the user-space library procedure at the instruction following the TRAP instruction (step 9).

This procedure then returns to the user program in the usual way procedure calls return (step 10).

To finish the job, the user program has to clean up the stack, as it does after any procedure call (step 11).

Q-12) Brief about Virtual Memory Architecture of OS. Draw necessary diagram. (April 2017)

Page 22 :

Q-13) Explain benefits and drawbacks of the exokernel OS

Conventional operating systems always have an impact on the performance, functionality and scope of applications that are built on them because the OS is positioned between the applications and the physical hardware.

The exokernel operating system attempts to address this problem by eliminating the notion that an operating system must provide abstractions upon which to build applications.

The idea is to impose as few abstractions as possible on the developers and to provide them with the liberty to use abstractions as and when needed.

The exokernel architecture is built such that a small kernel moves all hardware abstractions into untrusted libraries known as library operating systems.

Page 23 :

The main goal of exokernel is to ensure that there is no forced abstraction, which is what makes exokernel different from micro- and monolithic kernels.

Some of the features of exokernel operating systems include:

- Better support for application control
- Separates security from management
- Abstractions are moved securely to an untrusted library operating system
- Provides a low-level interface
- Library operating systems offer portability and compatibility

The benefits of the exokernel operating system include:

- Improved performance of applications
- More efficient use of hardware resources through precise resource allocation and revocation
- Easier development and testing of new operating systems
- Each user-space application is allowed to apply its own optimized memory management

Some of the drawbacks of the exokernel operating system include:

- Reduced consistency
- Complex design of exokernel interfaces

Q-14) Explain any two types of OS.

Batch Operating System

The users of a batch operating system do not interact with the computer directly. Each user prepares his job on an off-line device like punch cards and submits it to the computer operator. To speed up processing, jobs with similar needs are batched together and run as a group. The programmers leave their programs with the operator and the operator then sorts the programs with similar requirements into batches.

The problems with Batch Systems are as follows:

- Lack of interaction between the user and the job.
- CPU is often idle, because the speed of the mechanical I/O devices is slower than the CPU.
- Difficult to provide the desired priority.

Time-sharing Operating Systems:- (ref. Q-9)

Page 24 :

Distributed Operating System (ref. Q-9)

Network Operating System

A Network Operating System runs on a server and provides the server the capability to manage data, users, groups, security, applications, and other networking functions. The primary purpose of the network operating system is to allow shared file and printer access among multiple computers in a network, typically a local area network (LAN), a private network or to other networks.

Examples of network operating systems include Microsoft Windows Server 2003, Microsoft Windows Server 2008, UNIX, Linux, Mac OS X, Novell NetWare, and BSD.

The advantages of network operating systems are as follows:

- Centralized servers are highly stable.
- Security is server managed.
- Upgrades to new technologies and hardware can be easily integrated into the system.
- Remote access to servers is possible from different locations and types of systems.

The disadvantages of network operating systems are as follows:

- High cost of buying and running a server.
- Dependency on a central location for most operations.
- Regular maintenance and updates are required.

Real-Time Operating System

A real-time system is defined as a data processing system in which the time interval required to process and respond to inputs is so small that it controls the environment. The time taken by the system to respond to an input and display the required updated information is termed the response time. In this method, the response time is much shorter than in online processing.

Real-time systems are used when there are rigid time requirements on the operation of a processor or the flow of data, and real-time systems can be used as a control device in a dedicated application. A real-time operating system must have well-defined, fixed time constraints, otherwise the system will fail. Examples include scientific experiments, medical imaging systems, industrial control systems, weapon systems, robots, air traffic control systems, etc.

Page 25 :

Q-15) What are the primary differences between Network Operating System and Distributed Operating System?

Network and Distributed Operating systems have a common hardware base, but the difference lies in software.

| Sr. No. | Network Operating System | Distributed Operating System |
|---|---|---|
| 1 | A network operating system is made up of software and associated protocols that allow a set of networked computers to be used together. | A distributed operating system is an ordinary centralized operating system but runs on multiple independent CPUs. |
| 2 | Environment users are aware of multiplicity of machines. | Environment users are not aware of multiplicity of machines. |
| 3 | Control over file placement is done manually by the user. | It can be done automatically by the system itself. |
| 4 | Performance is badly affected if certain part of the hardware starts malfunctioning. | It is more reliable or fault tolerant, i.e., a distributed operating system performs even if certain part of the hardware starts malfunctioning. |
| 5 | Remote resources are accessed by either logging into the desired remote machine or transferring data from the remote machine to user's own machines. | Users access remote resources in the same manner as they access local resources. |

Page 26 :

Q-16) What are the differences between Batch processing system and Real Time Processing System?

Following are the differences between Batch processing system and Real Time Processing System.

| Sr. No. | Batch Processing System | Realtime Processing System |
|---|---|---|
| 1 | Jobs with similar requirements are batched together and run through the computer as a group. | In this system, events mostly external to computer system are accepted and processed within certain deadlines. |
| 2 | This system is particularly suited for applications such as Payroll, Forecasting, Statistical analysis etc. | This processing system is particularly suited for applications such as scientific experiments, Flight control, few military applications, Industrial control etc. |
| 3 | It provides most economical and simplest processing method for business applications. | Complex and costly processing requires unique hardware and software to handle complex operating system programs. |
| 4 | In this system data is collected for defined period of time and is processed in batches. | Supports random data input at random time. |
| 5 | In this system sorting is performed before processing. | No sorting is required. |
| 6 | It is measurement oriented. | It is action or event oriented. |
| 7 | Transactions are processed periodically, in batches. | Transactions are processed as and when they occur. |

Page 27 :

| Sr. No. | Batch Processing System | Realtime Processing System |
|---|---|---|
| 8 | In this processing there is no time limit. | It has to handle a process within the specified time limit otherwise the system fails. |

Q-17) What are the differences between Real Time System and Timesharing System?

Following are the differences between Real Time system and Timesharing System.

| Sr. No. | Real Time System | Timesharing System |
|---|---|---|
| 1 | In this system, events mostly external to computer system are accepted and processed within certain deadlines. | In this system, many users are allowed to simultaneously share the computer resources. |
| 2 | Real time processing is mainly devoted to one application. | Time sharing processing deals with many different applications. |
| 3 | User can make inquiry only and cannot write or modify programs. | Users can write and modify programs. |
| 4 | User must get a response within the specified time limit; otherwise it may result in a disaster. | User should get a response within fractions of seconds but if not, the results are not disastrous. |
| 5 | No context switching takes place in this system. | The CPU switches from one process to another as a time slice expires or a process terminates. |

Page 28 :

Q-18) What are the differences between multiprocessing and multiprogramming?

Following are the differences between multiprocessing and multiprogramming.

| Sr. No. | Multiprocessing | Multiprogramming |
|---|---|---|
| 1 | Multiprocessing refers to processing of multiple processes at the same time by multiple CPUs. | Multiprogramming keeps several programs in main memory at the same time and executes them concurrently using a single CPU. |
| 2 | It utilizes multiple CPUs. | It utilizes single CPU. |
| 3 | It permits parallel processing. | Context switching takes place. |
| 4 | Less time taken to process the jobs. | More time taken to process the jobs. |
| 5 | It facilitates much more efficient utilization of the devices of the computer system. | Less efficient than multiprocessing. |
| 6 | Usually more expensive. | Such systems are less expensive. |

Q-19) How Buffering can improve the performance of a Computer system?

If the CPU and the I/O devices are nearly the same in speed, buffering helps the CPU and the I/O devices work at full speed, so that neither sits idle at any moment.

Normally the CPU is much faster than an input device. In this case the CPU always faces an empty input buffer and sits idle waiting for the input device which is to read a record into the

Page 29 :

buffer. For output, the CPU continues to work at full speed till the output buffer is full and then it starts waiting.

Thus buffering proves useful for those jobs that have a balance between computational work and I/O operations. In other cases, a buffering scheme may not work well.

Q-20) What inconveniences that a user can face while interacting with a computer system, which is without an operating system?

- Operating system is a required component of the computer system.
- Without an operating system, computer hardware is only an inactive electronic machine, which is inconvenient to the user for execution of programs.
- Computer hardware understands only machine language, and it is difficult to develop each and every program in machine language in order to execute it.
- Thus without an operating system, executing user programs or solving user problems is extremely difficult.

Q-21) What are the advantages of multiprogramming?

Advantages of multiprogramming are −

1. Increased CPU Utilization − Multiprogramming improves CPU utilization as it organizes a number of jobs so that the CPU always has one to execute.
2. Increased Throughput − Throughput means total number of programs executed over a fixed period of time. In multiprogramming, the CPU does not wait for I/O for the program it is executing, thus resulting in an increased throughput.
3. Shorter Turnaround Time − Turnaround time for short jobs is improved greatly in multiprogramming.
4. Improved Memory Utilization − In multiprogramming, more than one program resides in main memory. Thus memory is optimally utilized.
5. Increased Resources Utilization − In multiprogramming, multiple programs are actively competing for resources resulting in higher degree of resource utilization.
6. Multiple Users − Multiprogramming supports multiple users.

Page 30 :

Q-22) What are the advantages of Multiprocessing or Parallel System?

A multiprocessing operating system, or parallel system, supports the use of more than one processor in close communication.

The advantages of the multiprocessing system are:

1. Increased Throughput − By increasing the number of processors, more work can be completed in a unit time.
2. Cost Saving − A parallel system shares the memory, buses, peripherals etc. A multiprocessor system thus saves money as compared to multiple single systems. Also, if a number of programs are to operate on the same data, it is cheaper to store that data on one single disk shared by all processors instead of using many copies of the same data.
3. Increased Reliability − In this system, the workload is distributed among several processors, which results in increased reliability. If one processor fails, its failure may slightly slow down the system, but the system will continue to work.

Q-23) Explain Disk.

- A disk consists of one or more metal platters that rotate at 5400, 7200, 10,800 RPM or more.
- Information is written onto the disk in a series of concentric circles.
- At any given arm position, each of the heads can read an annular region called a track.
- Together, all the tracks for a given arm position form a cylinder.
- Each track is divided into some number of sectors, typically 512 bytes per sector.
- SSDs (Solid State Disks) do not have moving parts, do not contain platters in the shape of disks, and store data in (Flash) memory. The only way in which they resemble disks is that they also store a lot of data which is not lost when the power is off.
- Virtual memory: run programs larger than physical memory by placing them on the disk and using main memory as a kind of cache for the most heavily executed parts.

Page 31 :

Q-24) Explain I/O Devices

- I/O devices interact heavily with the operating system. I/O devices generally consist of two parts: a controller and the device itself.
- The controller is a chip or a set of chips that physically controls the device. It accepts commands from the operating system, for example, to read data from the device, and carries them out.
- Every controller has a small number of registers that are used to communicate with it. For example, a minimal disk controller might have registers for specifying the disk address, memory address, sector count, and direction (read or write).
- To activate the controller, the driver gets a command from the operating system, then translates it into the appropriate values to write into the device registers.
- The collection of all the device registers forms the I/O port space.

Input and output can be done in three different ways:

1. In the simplest method, a user program issues a system call, which the kernel then translates into a procedure call to the appropriate driver. The driver then starts the I/O and sits in a tight loop continuously polling the device to see if it is done (usually there is some bit that indicates that the device is still busy). When the I/O has completed, the driver puts the data (if any) where they are needed and returns. The operating system then returns control to the caller. This method is called busy waiting and has the disadvantage of tying up the CPU polling the device until it is finished.
2. The second method is for the driver to start the device and ask it to give an interrupt when it is finished. At that point the driver returns. The operating system then blocks the caller if need be and looks for other work to do. When the controller detects the end of the transfer, it generates an interrupt to signal completion.

Page 32 :

3. The third method for doing I/O makes use of special hardware: a DMA (Direct Memory Access) chip that can control the flow of bits between memory and some controller without constant CPU intervention.

Q-25) Explain Interrupt.

- Interrupts are very important in operating systems, so let us examine the idea more closely.
- In Fig. (a) we see a three-step process for I/O. In step 1, the driver tells the controller what to do by writing into its device registers.
- The controller then starts the device.
- When the controller has finished reading or writing the number of bytes it has been told to transfer, it signals the interrupt controller chip using certain bus lines in step 2.
- If the interrupt controller is ready to accept the interrupt (which it may not be if it is busy handling a higher-priority one), it asserts a pin on the CPU chip telling it, in step 3.
- In step 4, the interrupt controller puts the number of the device on the bus so the CPU can read it and know which device has just finished (many devices may be running at the same time).
- Once the CPU has decided to take the interrupt, the program counter and PSW are typically then pushed onto the current stack and the CPU switched into kernel mode.
- The device number may be used as an index into part of memory to find the address of the interrupt handler for this device.
- This part of memory is called the interrupt vector.
- Once the interrupt handler (part of the driver for the interrupting device) has started, it removes the stacked program counter and PSW and saves them, then queries the device to learn its status.

Page 33 :

- When the handler is all finished, it returns to the previously running user program to the first instruction that was not yet executed. These steps are shown in Fig. (b).

Q-26) Booting the Computer

- Every PC contains a parentboard (formerly called a motherboard before political correctness hit the computer industry).
- On the parentboard is a program called the system BIOS (Basic Input Output System).
- The BIOS contains low-level I/O software, including procedures to read the keyboard, write to the screen, and do disk I/O, among other things.
- Nowadays, it is held in a flash RAM, which is nonvolatile but which can be updated by the operating system when bugs are found in the BIOS.
- When the computer is booted, the BIOS is started. It first checks to see how much RAM is installed and whether the keyboard and other basic devices are installed and responding correctly.
- It starts out by scanning the PCIe and PCI buses to detect all the devices attached to them.
- If the devices present are different from when the system was last booted, the new devices are configured.
- The BIOS then determines the boot device by trying a list of devices stored in the CMOS memory.
- The user can change this list by entering a BIOS configuration program just after booting.
- Typically, an attempt is made to boot from a CD-ROM (or sometimes USB) drive, if one is present.
- If that fails, the system boots from the hard disk.
- The first sector from the boot device is read into memory and executed.
- This sector contains a program that normally examines the partition table at the end of the boot sector to determine which partition is active.
- Then a secondary boot loader is read in from that partition. This loader reads in the operating system from the active partition and starts it.
- The operating system then queries the BIOS to get the configuration information.
- For each device, it checks to see if it has the device driver.
- If not, it asks the user to insert a CD-ROM containing the driver (supplied by the device’s manufacturer) or to download it from the Internet.
- Once it has all the device drivers, the operating system loads them into the kernel.
- Then it initializes its tables, creates whatever background processes are needed, and starts up a login program or GUI.

Page 34 :

Chapter 2 Processes and Threads

Q-1) Explain the Process creation & termination.

Process creation:

Four principal events cause processes to be created:

1. System initialization.
2. Execution of a process-creation system call by a running process.
3. A user request to create a new process.
4. Initiation of a batch job.

- When an operating system is booted, typically numerous processes are created. Some of these are foreground processes, that is, processes that interact with (human) users and perform work for them. Others are background processes, which are not associated with particular users but have some specific function.
- For example, one background process may be designed to accept incoming email, sleeping most of the day but suddenly springing to life when email arrives. Another background process may be designed to accept incoming requests for Web pages hosted on that machine, waking up when a request arrives to service the request.
- In addition to the processes created at boot time, new processes can be created afterward as well. Creating new processes is particularly useful when the work to be done can easily be formulated in terms of several related, but otherwise independent, interacting processes.
- For example, if a large amount of data is being fetched over a network for subsequent processing, it may be convenient to create one process to fetch the data and put them in a shared buffer while a second process removes the data items and processes them. On a multiprocessor, allowing each process to run on a different CPU may also make the job go faster.

Process Termination:

After a process has been created, it starts running and does whatever its job is. However, nothing lasts forever, not even processes. Sooner or later the new process will terminate, usually due to one of the following conditions:

1. Normal exit (voluntary).
2. Error exit (voluntary).
3. Fatal error (involuntary).
4. Killed by another process (involuntary).

- Most processes terminate because they have done their work. When a compiler has compiled the program given to it, the compiler executes a system call to tell the operating system that it is finished. This call is exit in UNIX and ExitProcess in Windows.
- The second reason for termination is that the process discovers a fatal error.

Page 35 :

Prof. Tirup Parmar, , , , , The third reason for termination is an error caused by the process, often due to a program, bug. Examples include executing an illegal instruction, referencing nonexistent memory,, or dividing by zero. In some systems (e.g., UNIX), a process can tell the operating system, that it wishes to handle certain errors itself, in which case the process is signaled, (interrupted) instead of terminated when one of the errors occurs., The fourth reason a process might terminate is that the process executes a system call, telling the operating system to kill some other process. In UNIX this call is kill. The, corresponding Win32 function is TerminateProcess., , Q-2) Explain the Dining Philosophers Problem. (April 2017)(Nov 2016), , Video Lectures :- https://www.youtube.com/c/TirupParmar, , Page 35

Page 36 :

Q-3) How to implement Threads in the Kernel space and Threads in the User Space? (April 2017)

Implementing Threads in User Space:

Page 38 :

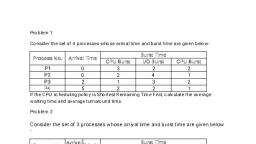

Q-5) Consider the following set of processes, with the arrival times and the CPU burst times given in milliseconds. (April 2017)

Page 40 :

Q-6) What is a process scheduler? State the characteristics of a good process scheduler?
OR
What is scheduling? What criteria affect the scheduler's performance?

Scheduling can be defined as a set of policies and mechanisms which controls the order in which the work to be done is completed. The system software concerned with scheduling is called the scheduler, and the algorithm it uses is called the scheduling algorithm.

Various criteria or characteristics that help in designing a good scheduling algorithm are:

1. CPU Utilization − A scheduling algorithm should be designed so that the CPU remains as busy as possible. It should make efficient use of the CPU.
2. Throughput − Throughput is the amount of work completed in a unit of time. In other words, throughput is the number of jobs (processes) completed per unit of time. The scheduling algorithm must look to maximize the number of jobs processed per time unit.
3. Response time − Response time is the time taken to start responding to the request. A scheduler must aim to minimize response time for interactive users.
4. Turnaround time − Turnaround time refers to the time between the moment of submission of a job/process and the time of its completion. Thus how long it takes to execute a process is also an important factor.
5. Waiting time − It is the time a job waits for resource allocation when several jobs are competing in a multiprogramming system. The aim is to minimize the waiting time.
6. Fairness − A good scheduler should make sure that each process gets its fair share of the CPU.

Page 41 :

Q-7) Explain time slicing? How its duration affects the overall working of the system?

Time slicing is a scheduling mechanism used in time sharing systems. It is also termed Round Robin scheduling. The aim of Round Robin scheduling, or time slicing, is to give all processes an equal opportunity to use the CPU. In this type of scheduling, CPU time is divided into slices that are allocated to ready processes. Short processes may be executed within a single time quantum. Long processes may require several quanta.

The Duration of time slice or Quantum

The performance of a time slicing policy is heavily dependent on the duration of the time quantum. When the time quantum is very large, the Round Robin policy becomes an FCFS policy. Too short a quantum causes too many process/context switches and reduces CPU efficiency. So the choice of time quantum is a very important design decision. Switching from one process to another requires a certain amount of time to save and load registers, update various tables and lists etc.

Consider, as an example, that a process switch or context switch takes 5 ms and the time slice duration is 20 ms. After every 20 ms of useful work the CPU spends 5 ms on process switching, wasting 5/25 = 20% of CPU time. Now let the time slice be set to, say, 500 ms with 10 processes in the ready queue. If P1 starts executing for the first time slice, then P2 will have to wait for 1/2 sec, and the waiting time for the other processes will increase. The unlucky last (P10) will have to wait for about 4.5 sec (nine full slices of 0.5 sec), assuming that all others use their full time slices. To conclude, setting the time slice:

1. Too short will cause too many process switches and will lower CPU efficiency.
2. Too long will cause poor response to short interactive processes.
3. A quantum around 100 ms is usually reasonable.

Q-8) What is Shortest Remaining Time, SRT scheduling?

Shortest Remaining Time (SRT) is a preemptive scheduling algorithm. In SRT, the process with the smallest runtime to complete (i.e., remaining time) is scheduled to run next, including new arrivals. In SRT, a running process may be preempted by a new process with a shorter estimated run time. It keeps track of the elapsed service time of the running process and handles occasional preemption.
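The SRT rule described above can be sketched as a small simulation. This is a minimal sketch, not a reference implementation: the function name `srt_schedule`, the one-time-unit step, and the tie-breaking rule (earlier arrival wins) are assumptions made for illustration. The job data are the four jobs of the worked example that follows.

```python
def srt_schedule(jobs):
    """Simulate Shortest Remaining Time scheduling one time unit at a time.

    jobs maps a job id to (arrival_time, burst_time).
    Returns a dict of job id -> completion time.
    """
    remaining = {job: burst for job, (_, burst) in jobs.items()}
    completion = {}
    clock = 0
    while remaining:
        # Jobs that have arrived and still need CPU time.
        ready = [job for job in remaining if jobs[job][0] <= clock]
        if not ready:
            clock += 1  # CPU idles until the next arrival
            continue
        # Smallest remaining time runs next; ties go to the earlier arrival
        # (the tie-break is an assumption, the notes do not specify one).
        current = min(ready, key=lambda job: (remaining[job], jobs[job][0]))
        remaining[current] -= 1
        clock += 1
        if remaining[current] == 0:
            del remaining[current]
            completion[current] = clock
    return completion


# Job id -> (arrival time, burst time), taken from the example in these notes.
jobs = {1: (0, 7), 2: (1, 4), 3: (3, 9), 4: (4, 5)}
completion = srt_schedule(jobs)
turnaround = {job: completion[job] - jobs[job][0] for job in jobs}
average_turnaround = sum(turnaround.values()) / len(jobs)
print(turnaround, average_turnaround)
```

Re-evaluating the choice at every time unit is what makes the policy preemptive: a newly arrived job with a shorter remaining time displaces the running one at the next tick.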

Page 42 :

Consider the following 4 jobs

| Job | Arrival Time | Burst Time |
|---|---|---|
| 1 | 0 | 7 |
| 2 | 1 | 4 |
| 3 | 3 | 9 |
| 4 | 4 | 5 |

The schedule of the SRT is as follows:

Job 1 (0-1), Job 2 (1-5), Job 4 (5-10), Job 1 (10-16), Job 3 (16-25)

Job 1 is started at time 0, being the only job in the queue. Job 2 arrives at time 1. The remaining time for job 1 is larger (6 time units) than the time required by job 2 (4 time units), so job 1 is preempted and job 2 is scheduled. The average turnaround time for the above is:

| Job | Turn Around Time |
|---|---|
| 1 | 16-0 = 16 |
| 2 | 5-1 = 4 |
| 3 | 25-3 = 22 |

Page 43 :

| Job | Turn Around Time |
|---|---|
| 4 | 10-4 = 6 |
| Total | 48 |

The average turnaround time is 48/4 = 12 time units.

Advantage

Average turnaround time is less.

Disadvantage

Sometimes a running process that is almost complete is preempted because a new job with a very small runtime arrives. It is not really worth doing.

Q-9) What is Highest Response Ratio Next (HRN) Scheduling?

- HRN is a non-preemptive scheduling algorithm.
- In Shortest Job First scheduling, priority is given to the shortest job, which may sometimes cause indefinite blocking of longer jobs.
- HRN Scheduling is used to correct this disadvantage of SJF.
- For determining priority, not only the job's service time but also the waiting time is considered.
- In this algorithm, dynamic priorities are used instead of fixed priorities.
- Dynamic priorities in HRN are calculated as

  Priority = (waiting time + service time) / service time.

  So shorter jobs get preference over longer processes because service time appears in the denominator.
- Longer jobs that have been waiting for a long period are also given favorable treatment because waiting time is considered in the numerator.
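The HRN priority formula above can be tried out directly. The sketch below is illustrative only: the function names `response_ratio` and `pick_next`, and the job names and numbers, are hypothetical and do not come from the notes.

```python
def response_ratio(waiting_time, service_time):
    """HRN priority = (waiting time + service time) / service time."""
    return (waiting_time + service_time) / service_time


def pick_next(ready_jobs, clock):
    """Choose the ready job with the highest response ratio.

    ready_jobs maps a job name to (arrival_time, service_time);
    waiting time is measured from arrival up to the current clock.
    """
    return max(
        ready_jobs,
        key=lambda name: response_ratio(clock - ready_jobs[name][0],
                                        ready_jobs[name][1]),
    )


# Hypothetical ready queue at time 12: the short job wins (ratio 2.5 vs 2.0).
ready_at_12 = {"long": (0, 12), "medium": (6, 6), "short": (9, 2)}
first_choice = pick_next(ready_at_12, clock=12)

# At time 30 the long job has waited 30 units (ratio 3.5), so it now beats
# a freshly arrived short job (ratio 2.5): waiting raises the priority.
ready_at_30 = {"long": (0, 12), "new_short": (27, 2)}
second_choice = pick_next(ready_at_30, clock=30)
print(first_choice, second_choice)
```

Because the ratio grows with waiting time, a long job cannot be postponed indefinitely, which is the correction to SJF that the notes describe.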

Page 44 :

Q-10) What are the different principles which must be considered while selection of a scheduling algorithm?

The objectives/principles which should be kept in view while selecting a scheduling policy are the following −

1. Fairness − All processes should be treated the same. No process should suffer indefinite postponement.
2. Maximize throughput − Attain maximum throughput. The largest possible number of processes per unit time should be serviced.
3. Predictability − A given job should run in about the same predictable amount of time and at about the same cost irrespective of the load on the system.
4. Maximum resource usage − The system resources should be kept busy. Indefinite postponement should be avoided by enforcing priorities.
5. Controlled Time − There should be control over the different times:
   1. Response time
   2. Turnaround time
   3. Waiting time

   The objective should be to minimize the above mentioned times.

Page 45 :

Q-11) Shown below is the workload for 5 jobs arriving at time zero in the order given below −

| Job | Burst Time |
|---|---|
| 1 | 10 |
| 2 | 29 |
| 3 | 3 |
| 4 | 7 |
| 5 | 12 |

Now find out which algorithm among FCFS, SJF and Round Robin with quantum 10 would give the minimum average waiting time.

Answer: For FCFS, the jobs will be executed as:

Job 1 (0-10), Job 2 (10-39), Job 3 (39-42), Job 4 (42-49), Job 5 (49-61)

| Job | Waiting Time |
|---|---|
| 1 | 0 |
| 2 | 10 |
| 3 | 39 |

Page 46 :

| Job | Waiting Time |
|---|---|
| 4 | 42 |
| 5 | 49 |
| Total | 140 |

The average waiting time is 140/5 = 28.

For SJF (non-preemptive), the jobs will be executed as:

Job 3 (0-3), Job 4 (3-10), Job 1 (10-20), Job 5 (20-32), Job 2 (32-61)

| Job | Waiting Time |
|---|---|
| 1 | 10 |
| 2 | 32 |
| 3 | 0 |
| 4 | 3 |
| 5 | 20 |
| Total | 65 |

The average waiting time is 65/5 = 13.

For Round Robin, the jobs will be executed as:

Job 1 (0-10), Job 2 (10-20), Job 3 (20-23), Job 4 (23-30), Job 5 (30-40), Job 2 (40-50), Job 5 (50-52), Job 2 (52-61)

Page 47 :

Prof. Tirup Parmar, Job, , Waiting Time, , 1, , 0, , 2, , 32, , 3, , 20, , 4, , 23, , 5, , 40, , 115, The average waiting time is 115/5=23., Thus SJF gives the minimum average waiting time., , Q-12) Explain pseudo parallelism. Describe the process model that makes, parallelism easier to deal with., All modern computers can do many things at the same time. For Example computer can be, reading from a disk and printing on a printer while running a user program. In a, multiprogramming system, the CPU switches from program to program, running each program, for a fraction of second., Although the CPU is running only one program at any instant of time. As CPU speed is very high, so it can work on several programs in a second. It gives user an illusion of parallelism i.e. several, processes are being processed at the same time. This rapid switching back and forth of the CPU, between programs gives the illusion of parallelism and is termed as pseudo parallelism. As it is, extremely difficult to keep track of multiple, parallel activities, to make parallelism easier to deal, with, the operating system designers have evolved a process model., , Video Lectures :- https://www.youtube.com/c/TirupParmar, , Page 47
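
As a cross-check on the Q-11 answer, the three waiting-time tables can be recomputed with a short Python sketch. The function names are hypothetical, and the code assumes, as the question states, that all jobs arrive at time zero and that context-switch time is ignored.

```python
from collections import deque

def fcfs_waits(bursts):
    """Each job waits for all jobs ahead of it in arrival order."""
    waits, clock = [], 0
    for burst in bursts:
        waits.append(clock)
        clock += burst
    return waits

def sjf_waits(bursts):
    """Non-preemptive SJF with all arrivals at time zero: run in order of burst time."""
    waits = [0] * len(bursts)
    clock = 0
    for i in sorted(range(len(bursts)), key=lambda i: bursts[i]):
        waits[i] = clock
        clock += bursts[i]
    return waits

def rr_waits(bursts, quantum):
    """Round Robin: waiting time = completion time - burst time."""
    remaining = list(bursts)
    finish = [0] * len(bursts)
    queue = deque(range(len(bursts)))
    clock = 0
    while queue:
        i = queue.popleft()
        run = min(quantum, remaining[i])
        clock += run
        remaining[i] -= run
        if remaining[i] > 0:
            queue.append(i)      # not finished: back to the tail of the queue
        else:
            finish[i] = clock
    return [finish[i] - bursts[i] for i in range(len(bursts))]

bursts = [10, 29, 3, 7, 12]      # jobs 1..5 from Q-11
for name, waits in [("FCFS", fcfs_waits(bursts)),
                    ("SJF", sjf_waits(bursts)),
                    ("RR", rr_waits(bursts, 10))]:
    print(name, waits, sum(waits) / len(waits))
```

The averages come out as 28, 13, and 23, matching the tables in Q-11.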

The Process Model

In the process model, all the runnable software on the computer (including the operating system) is organized into a number of sequential processes. A process is just an executing program and includes the current values of the program counter, registers and variables. Each process is considered to have its own virtual CPU. In reality, the real CPU switches back and forth from process to process. Rather than tracking how the CPU switches from program to program, it is easier to think about a collection of processes running in (pseudo) parallel. This rapid switching back and forth is called multiprogramming.

[Figure: multiprogramming of four programs, with one program counter and process switches.]

[Figure: conceptual model of 4 independent sequential processes.]

Only one program is active at any moment. The rate at which a process performs its computation might not be uniform. However, processes are usually not affected by the relative speeds of other processes.

Q-13) What are the differences between paging and segmentation?

Answer: Following are the differences between paging and segmentation.

Sr. No. | Paging | Segmentation
1 | A page is a physical unit of information. | A segment is a logical unit of information.
2 | A page is invisible to the user's program. | A segment is visible to the user's program.
3 | A page is of fixed size, e.g. 4 Kbytes. | A segment is of varying size.
4 | The page size is determined by the machine architecture. | A segment size is determined by the user.
5 | Internal fragmentation may occur. | Segmentation avoids internal fragmentation but can cause external fragmentation.
6 | Page frames in main memory are required. | No frames are required.

Q-14) Explain the following allocation algorithms.
1. First fit
2. Best fit
3. Worst fit
4. Buddy system
5. Next fit

Answer:

First Fit
The first fit approach allocates the first free partition or hole that is large enough to accommodate the process. The search finishes as soon as the first suitable free partition is found.

Advantage
Fastest algorithm because it searches as little as possible.

Disadvantage
The unused memory left over after an allocation is wasted if it is too small. As a result, a later request for a larger amount of memory may not be satisfiable.

Best Fit
The best fit approach allocates the smallest free partition that meets the requirement of the requesting process. This algorithm searches the entire list of free partitions and picks the smallest hole that is adequate, i.e., the hole closest in size to what the process actually needs.
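
The difference between the first fit and best fit searches described above can be seen in a minimal Python sketch. The list of hole sizes is an assumed example, and `first_fit` and `best_fit` are hypothetical helper names; a real allocator would also track addresses and split the chosen hole.

```python
def first_fit(holes, size):
    """Index of the first hole that is large enough, or None."""
    for i, hole in enumerate(holes):
        if hole >= size:
            return i             # stop at the first suitable hole
    return None

def best_fit(holes, size):
    """Index of the smallest hole that is large enough, or None."""
    candidates = [i for i, hole in enumerate(holes) if hole >= size]
    if not candidates:
        return None
    return min(candidates, key=lambda i: holes[i])   # scans the whole list

holes = [100, 500, 200, 300, 600]   # assumed free partition sizes in KB
print(first_fit(holes, 212))        # index 1 (the 500 KB hole)
print(best_fit(holes, 212))         # index 3 (the 300 KB hole)
```

For a 212 KB request, first fit stops at the 500 KB hole, while best fit examines every hole and picks the 300 KB one, leaving a smaller leftover.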

Advantage
Memory utilization is much better than with first fit because the smallest free partition that is large enough is allocated.

Disadvantage
It is slower and may even tend to fill up memory with tiny useless holes.

Worst Fit
The worst fit approach locates the largest available free portion so that the portion left over will be big enough to be useful. It is the reverse of best fit.

Advantage
Reduces the rate of production of small gaps.

Disadvantage
If a process requiring a larger amount of memory arrives at a later stage, it cannot be accommodated because the largest hole has already been split and occupied.

Buddy System
In the buddy system, the sizes of free blocks are integral powers of 2, e.g. 2, 4, 8, 16, etc., up to the size of memory. When a block of size 2^k is requested, a free block from the list of free blocks of size 2^k is allocated. If no free block of size 2^k is available, a block of the next larger size, 2^(k+1), is split into two halves called buddies to satisfy the request.

Example
Let the total memory size be 512KB and let a process P1 require 70KB to be swapped in. As the hole lists are only for powers of 2, a 128KB block will be big enough. Initially there is no 128KB block, nor a 256KB block. Thus the 512KB block is split into two buddies of 256KB each; one of them is further split into two 128KB blocks, and one of those is allocated to the process. Next, P2 requires 35KB. Rounding 35KB up to a power of 2, a 64KB block is required, so the remaining 128KB block is split into two 64KB buddies and one of them is allocated to P2. A process P3 (130KB) is then placed in the whole 256KB block. When an allocated block is later freed, it is recombined with its buddy to form the original block of twice the size, provided the buddy is also free.
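
The 512KB example above can be replayed with a small Python sketch of a buddy allocator. This is a simplified illustration under stated assumptions: sizes are in KB, the total is a power of 2, and the class `BuddyAllocator` with its `allocate` and `release` methods is a hypothetical design, not code from any operating system.

```python
def round_up_pow2(n):
    """Smallest power of 2 that is >= n."""
    size = 1
    while size < n:
        size *= 2
    return size

class BuddyAllocator:
    def __init__(self, total):
        self.total = total              # assumed to be a power of 2
        self.free = {total: [0]}        # block size -> free start addresses

    def allocate(self, request):
        need = round_up_pow2(request)
        size = need
        # Find the smallest free block that is at least `need` in size
        while size <= self.total and not self.free.get(size):
            size *= 2
        if size > self.total:
            return None                 # no block large enough
        addr = self.free[size].pop()
        # Split repeatedly, putting the upper buddy on the free list
        while size > need:
            size //= 2
            self.free.setdefault(size, []).append(addr + size)
        return addr, need

    def release(self, addr, size):
        # Merge with the buddy as long as the buddy is also free
        while size < self.total:
            buddy = addr ^ size         # buddy address differs in one bit
            if buddy not in self.free.get(size, []):
                break
            self.free[size].remove(buddy)
            addr = min(addr, buddy)
            size *= 2
        self.free.setdefault(size, []).append(addr)

mem = BuddyAllocator(512)
p1 = mem.allocate(70)     # rounded up to 128
p2 = mem.allocate(35)     # rounded up to 64
p3 = mem.allocate(130)    # rounded up to 256
print(p1, p2, p3)
```

Each result is a (start address, block size) pair. Releasing all three blocks merges the buddies back into a single free 512KB block.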

Advantage
The buddy system is faster. When a block of size 2^k is freed, only the list of holes of size 2^k has to be searched to check whether a merge is possible, whereas in other algorithms the entire hole list must be searched.

Disadvantage
It is often inefficient in terms of memory utilization. As all requests must be rounded up to a power of 2, a 35KB process is allocated 64KB, wasting 29KB through internal fragmentation. There may also be holes between the buddies, causing external fragmentation.

Next Fit
Next fit is a modified version of first fit. It begins like first fit to find a free partition. When called the next time, it starts searching from where it left off, not from the beginning.

Q-15) When does a page fault occur? Explain various page replacement strategies/algorithms.

Answer: In the demand paging memory management technique, if a page demanded for execution is not present in main memory, a page fault occurs. To load the demanded page into main memory, a free page frame is searched for in main memory and allocated. If no page frame is free, the memory manager has to free a frame by swapping its contents out to secondary storage and thus make room for the required page. Many schemes or strategies are used to choose which page to swap out.

Various page replacement strategies / algorithms

1. The Optimal Page Replacement Algorithm: This algorithm replaces the page that will not be used for the longest period of time. At the moment a page fault occurs, some set of pages is in memory. One of these pages will be referenced on the very next instruction. Other pages may not be referenced until 10, 100 or perhaps 1000 instructions later. This information can be stored with each page in the PMT (Page Map Table).

P#     base    Offset    MISC
1                        10
2                        NIL
3                        1000
...
10                       100

2. The optimal page algorithm simply removes the page with the highest number of such instructions, implying that it will be needed in the most distant future. This algorithm cannot be implemented directly because it requires future knowledge of the program behavior. However, it is possible to implement optimal page replacement on the second run of a program by using the page reference information collected on the first run.

3. NRU (Not Recently Used) Page Replacement Algorithm: This algorithm requires that each page have two additional status bits, 'R' and 'M', called the reference bit and the change bit respectively. The reference bit (R) is automatically set to 1 whenever the page is referenced. The change bit (M) is set to 1 whenever the page is modified. These bits are stored in the PMT and are updated on every memory reference. The R bit is cleared periodically (e.g., on each clock interrupt) so that it reflects only recent references. When a page fault occurs, the memory manager inspects all the pages and divides them into 4 classes based on the R and M bits.
   - Class 1: (0,0): neither recently used nor modified; the best page to replace.
   - Class 2: (0,1): not recently used but modified; the page will need to be written out before replacement.
   - Class 3: (1,0): recently used but clean; probably will be used again soon.
   - Class 4: (1,1): recently used and modified; probably will be used again, and a write-out will be needed before replacing it.

This algorithm removes a page at random from the lowest numbered non-empty class.
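
The NRU selection rule can be sketched as follows. The dictionary of (R, M) bits is an assumed example, and `nru_class` and `nru_victim` are hypothetical names; in a real system the bits live in the page table and are set by hardware.

```python
import random

def nru_class(r, m):
    """Class 1..4 as numbered in the text: (R, M) = (0,0), (0,1), (1,0), (1,1)."""
    return 2 * r + m + 1

def nru_victim(pages, rng=random):
    """pages maps page number -> (R, M). Returns the page chosen for eviction."""
    lowest = min(nru_class(r, m) for r, m in pages.values())
    candidates = [p for p, (r, m) in pages.items() if nru_class(r, m) == lowest]
    return rng.choice(candidates)   # random pick within the lowest non-empty class

# Assumed snapshot of R and M bits at the time of a page fault
pages = {0: (1, 1), 1: (0, 1), 2: (1, 0), 3: (0, 1)}
print(nru_victim(pages))            # page 1 or page 3 (both are in class 2)
```

Class 1 is empty in this snapshot, so the victim comes from class 2, which here contains pages 1 and 3.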

Advantages:
   - It is easy to understand.
   - It is efficient to implement.

4. FIFO (First In First Out) Page Replacement Algorithm: This is one of the simplest page replacement algorithms. The oldest page, which has spent the longest time in memory, is chosen and replaced. The algorithm is implemented with the help of a FIFO queue that holds the pages in memory. A page is inserted at the rear end of the queue and is replaced at the front of the queue.

In the figure, the reference string is 5, 4, 3, 2, 5, 4, 6, 5, 4, 3, 2, 6 and there are 3 empty frames. The first 3 references (5, 4, 3) cause page faults and are brought into the empty frames. The next reference (2) replaces page 5 because page 5 was loaded first, and so on. After 7 page faults, the next reference is 5, and 5 is already in memory, so there is no page fault for this reference. The same holds for the next reference, 4. The + mark shows an incoming page, while the circle shows the page chosen for removal.

Advantages
   - FIFO is easy to understand.
   - It is very easy to implement.

Disadvantages
   - Its performance is not always good. It may replace an active page to bring in a new one and thus cause a page fault on that page almost immediately.
   - Another unexpected side effect is the FIFO anomaly, or Belady's anomaly. This anomaly says that the page fault rate may increase as the number of allocated page frames increases.

E.g., the following figure presents the same page trace but with a larger memory. Here the number of page frames is 4.

Here there are 10 page faults instead of 9.

5. LRU (Least Recently Used) Algorithm: The Least Recently Used (LRU) algorithm replaces the page that has not been used for the longest period of time. It is based on the observation that pages that have not been used for a long time will probably remain unused for a long time, and so are the ones to replace.
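
The FIFO fault counts quoted above (9 faults with 3 frames, 10 faults with 4 frames) can be reproduced with a short Python sketch. The function name `fifo_faults` is hypothetical; the reference string is the one given in the FIFO example.

```python
from collections import deque

def fifo_faults(reference_string, frames):
    """Count page faults under FIFO replacement with the given number of frames."""
    memory = deque()                 # front = oldest page, rear = newest page
    faults = 0
    for page in reference_string:
        if page in memory:
            continue                 # hit: FIFO order is not changed by a reference
        faults += 1
        if len(memory) == frames:
            memory.popleft()         # evict the page that was loaded first
        memory.append(page)
    return faults

refs = [5, 4, 3, 2, 5, 4, 6, 5, 4, 3, 2, 6]
print(fifo_faults(refs, 3))          # 9
print(fifo_faults(refs, 4))          # 10
```

Adding a fourth frame raises the fault count from 9 to 10 for this string, which is exactly Belady's anomaly.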

Initially, 3 page frames are empty. The first 3 references (7, 0, 1) cause page faults and are brought into these empty frames. The next reference (2) replaces page 7. Since the next page reference (0) is already in memory, there is no page fault. For the next reference (3), LRU replacement sees that, of the three pages in memory, page 1 was used least recently, and so page 1 is replaced. The process continues in this way.

Advantages
   - The LRU page replacement algorithm is quite efficient.
   - It does not suffer from Belady's anomaly.

Disadvantages
   - Its implementation is not very easy.
   - Its implementation may require substantial hardware assistance.
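
The LRU walk-through above can be traced with a minimal Python sketch. It uses only the six references mentioned in the text (7, 0, 1, 2, 0, 3); `lru_trace` is a hypothetical helper, and the list-based bookkeeping is chosen for clarity rather than speed.

```python
def lru_trace(reference_string, frames):
    """Return (fault count, list of evicted pages) under LRU replacement."""
    memory = []      # least recently used page first, most recently used last
    faults = 0
    evicted = []
    for page in reference_string:
        if page in memory:
            memory.remove(page)                  # hit: will be re-added as most recent
        else:
            faults += 1
            if len(memory) == frames:
                evicted.append(memory.pop(0))    # evict the least recently used page
        memory.append(page)
    return faults, evicted

print(lru_trace([7, 0, 1, 2, 0, 3], 3))          # (5, [7, 1])
```

The evicted pages are 7 and then 1, as in the description: the hit on page 0 makes it the most recently used, so page 1 becomes the oldest. Updating this ordering on every single reference is what makes a true LRU costly without hardware support.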

Q-16) Write a short note on Semaphores. (Nov 2016)

- In 1965, E. W. Dijkstra suggested using an integer variable to count the number of wakeups saved for future use.
- In his proposal, a new variable type, which he called a semaphore, was introduced. A semaphore could have the value 0, indicating that no wakeups were saved, or some positive value if one or more wakeups were pending.
- Dijkstra proposed having two operations on semaphores, now usually called down and up (generalizations of sleep and wakeup, respectively).
- The down operation on a semaphore checks to see if the value is greater than 0. If so, it decrements the value (i.e., uses up one stored wakeup) and just continues. If the value is 0, the process is put to sleep without completing the down for the moment.
- Checking the value, changing it, and possibly going to sleep are all done as a single, indivisible atomic action.
- It is guaranteed that once a semaphore operation has started, no other process can access the semaphore until the operation has completed or blocked.
- This atomicity is absolutely essential to solving synchronization problems and avoiding race conditions.
- Atomic actions, in which a group of related operations are either all performed without interruption or not performed at all, are extremely important in many other areas of computer science as well.
- The up operation increments the value of the semaphore addressed. If one or more processes were sleeping on that semaphore, unable to complete an earlier down operation, one of them is chosen by the system (e.g., at random) and is allowed to complete its down.
- Thus, after an up on a semaphore with processes sleeping on it, the semaphore will still be 0, but there will be one fewer process sleeping on it.
- The operation of incrementing the semaphore and waking up one process is also indivisible.
- No process ever blocks doing an up, just as no process ever blocks doing a wakeup in the earlier model.

Q-17) Explain semaphores and its different types.

Dijkstra proposed a significant technique for managing concurrent processes in complex mutual exclusion problems. He introduced a new synchronization tool called the semaphore.

Semaphores are of two types:
1. Binary semaphore
2. Counting semaphore
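
The down and up operations described in Q-16 can be sketched in Python with threads standing in for processes. This is an illustrative sketch only: the class below is a hypothetical teaching version built on `threading.Condition`, whose lock provides the atomicity the text requires. Python's standard library already offers `threading.Semaphore` for real use.

```python
import threading

class Semaphore:
    def __init__(self, value=0):
        self._value = value                  # number of saved wakeups
        self._cond = threading.Condition()   # lock + wait queue

    def down(self):
        with self._cond:                     # check, sleep, and decrement are atomic
            while self._value == 0:
                self._cond.wait()            # sleep until some thread does an up
            self._value -= 1                 # use up one stored wakeup

    def up(self):
        with self._cond:                     # increment and wakeup are atomic
            self._value += 1
            self._cond.notify()              # let one sleeper complete its down

events = []
sem = Semaphore(0)

def worker():
    sem.down()                               # blocks: the value is 0
    events.append("worker passed down")

t = threading.Thread(target=worker)
t.start()
events.append("main calls up")
sem.up()                                     # never blocks
t.join()
print(events)
```

The worker cannot get past `down` until the main thread calls `up`. Once the woken worker completes its `down`, the value is back to 0, which matches the statement that the semaphore is still 0 after an up with a sleeper present.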
