
CHAPTER 1

Q 1 Explain what regression is. (W-11 for 4 marks)
Q 2 Explain what you mean by regression analysis. (W-14 for 4 marks)

1.1 Curve Fitting or Regression

The statistical method that helps us estimate the unknown value of one variable from the known value of a related variable is called regression.

Usually a mathematical equation is fitted to experimental data by plotting the data on graph paper and then passing a straight line through the data points. This method has the obvious drawback that the straight line drawn may not be unique. The method of least-squares regression is probably the most systematic procedure for fitting a unique curve through given data points, and it is widely used in practical computations. It can also be easily implemented on a digital computer.

The general problem in curve fitting is to find, if possible, an analytical expression of the form y = f(x) for the functional relationship suggested by the given data.

Fitting curves to a set of numerical data is of considerable importance in theory as well as in practice. Theoretically, it is useful in the study of correlation and regression. In practical statistics, it enables us to represent the relationship between two variables by simple algebraic expressions. Moreover, it may be used to estimate the values of one variable that would correspond to specified values of the other variable.

By-Anurag Dwivedi (0721-2566733, 9422916055) 1-1
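The non-uniqueness of a hand-drawn line can be made concrete with a small sketch. It scores two lines that two people might plausibly draw "by eye" through the same points, using the sum of squared vertical deviations of the points from each line. The data values, the two candidate lines, and the helper name `sum_sq_dev` are all hypothetical, chosen only for illustration.

```python
# Hypothetical data points (x, y), for illustration only.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]


def sum_sq_dev(a, b):
    """Sum of squared vertical deviations of the data from the line y = a + b*x."""
    return sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))


# Two lines that different people might draw "by eye" (hypothetical).
line_a = (0.0, 2.0)  # y = 2x
line_b = (0.5, 1.9)  # y = 0.5 + 1.9x

for name, (a, b) in [("line A", line_a), ("line B", line_b)]:
    print(f"{name}: y = {a} + {b}x, sum of squared deviations = {sum_sq_dev(a, b):.3f}")
```

Both lines look reasonable on a graph, yet they give different sums (0.110 for line A and 0.350 for line B). A numerical criterion of this kind is what lets the least-squares method single out one line instead of leaving the choice to the eye.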


Q 1 Explain the method of least squares. (S-11/13, W-…)
Q 2 Explain the method of least squares regression. (S-12 for 4 marks)
Q 3 Explain the method of least squares to fit a curve. (W-13 for 4 marks)
Q 4 Obtain the normal equations for fitting a straight line by the principle of least squares. (W-12, S-13 for 4 marks)
Q 5 Explain the method of least squares in brief. (S-12 for 4 marks, asked in unit …)

1.2 Method of Least Squares or Principle of Least Squares

The least squares method is a very popular and efficient method for curve fitting. Let (x_i, y_i), where i = 1, 2, ..., n, be the observed set of values of the variables (x, y). Let

$$ y = f(x) \qquad (1) $$

be a functional relationship sought between the variables x and y. Then

$$ e_i = y_i - f(x_i) $$

which is the difference between the observed value of y and the value of y determined by the functional relation, is called the residual or error. The principle of least squares states that the constants of f should be chosen so that the sum of the squares of the residuals,

$$ S = \sum_{i=1}^{n} \left[ y_i - f(x_i) \right]^2 \qquad (2) $$

is minimum.

Since we are minimizing the sum of squares of deviations, the method is known as the least squares method. Fitting the relation means determining the constants or coefficients of equation (1) for the given data such that S in equation (2) is minimum.

This is done by applying the theory of maxima and minima, that is, by differentiating equation (2) with respect to the unknown constants


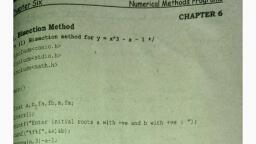

of the equation y = f(x) and setting these derivatives equal to zero. If there are k constants, we obtain k equations, which can be solved to get the values of the k unknown constants. These equations are known as the normal equations.

The procedure for obtaining these normal equations and the corresponding solutions depends on the nature of the function y = f(x).

Q 1 Explain the term linear regression in detail. (W-12/13 for 4 marks)
Q 2 Explain what you mean by linear regression. (S-14 for 4 marks)
Q 3 Obtain the normal equations for fitting a straight line. (S-14 for 4 marks)
Q 4 What is the least squares technique to fit a straight line? When is the line said to be the best fit? (W-14 for 4 marks)

1.3 Linear Regression

The simplest example of a least-squares approximation is fitting a straight line to a set of paired observations (x_1, y_1), (x_2, y_2), ..., (x_n, y_n). The mathematical expression for the straight line is

$$ y = a + b x + e \qquad (1) $$

where a and b are coefficients representing the intercept and the slope, respectively, and e is the error, or residual, between the model and the observations. Rearranging equation (1) gives

$$ e = y - a - b x $$

Thus the error, or residual, is the difference between the true value of y and the approximate value a + bx predicted by the linear equation.

The strategy for the best fit is to minimize the sum of squares of the residuals between the measured y and the y calculated with the linear model:

$$ S_r = \sum_{i=1}^{n} e_i^2 = \sum_{i=1}^{n} \left( y_i - a - b x_i \right)^2 $$
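The normal-equation procedure described above (one equation per unknown constant, obtained by setting the derivative of S with respect to that constant to zero) can be sketched in Python. The sketch assumes that f is linear in its k constants, f(x) = c_1 φ_1(x) + ... + c_k φ_k(x); this assumption keeps the normal equations linear so they can be solved directly. For the straight line y = a + bx the basis is (1, x), and the two normal equations become Σy = na + bΣx and Σxy = aΣx + bΣx². The function names `normal_equations`, `solve`, and `fit`, and the data values, are hypothetical.

```python
# Minimal sketch (assumption): f(x) = c_1*phi_1(x) + ... + c_k*phi_k(x),
# i.e. f is linear in its k unknown constants, so the k normal equations
# dS/dc_j = 0 form a linear k x k system.

def normal_equations(basis, xs, ys):
    """Build A c = rhs, with A[j][l] = sum_i phi_j(x_i) * phi_l(x_i)
    and rhs[j] = sum_i phi_j(x_i) * y_i (one row per unknown constant)."""
    k = len(basis)
    A = [[sum(basis[j](x) * basis[l](x) for x in xs) for l in range(k)]
         for j in range(k)]
    rhs = [sum(basis[j](x) * y for x, y in zip(xs, ys)) for j in range(k)]
    return A, rhs


def solve(A, rhs):
    """Solve A c = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    M = [row[:] + [r] for row, r in zip(A, rhs)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    coeffs = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        tail = sum(M[r][j] * coeffs[j] for j in range(r + 1, n))
        coeffs[r] = (M[r][n] - tail) / M[r][r]
    return coeffs


def fit(basis, xs, ys):
    """Least-squares constants for the given basis functions."""
    return solve(*normal_equations(basis, xs, ys))


# Straight line y = a + b*x: basis (1, x) yields the two normal equations
#   n*a       + (sum x)*b   = sum y
#   (sum x)*a + (sum x^2)*b = sum x*y
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]  # hypothetical data
a, b = fit([lambda x: 1.0, lambda x: x], xs, ys)
print(f"a = {a:.4f}, b = {b:.4f}")  # prints a = 0.0500, b = 1.9900
```

The same `fit` works unchanged for any other basis, for example (1, x, x²) for a parabola, because only the number of normal equations changes. For f that is nonlinear in its constants the normal equations are themselves nonlinear, and this direct solve no longer applies.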


[Figure: data point (x_i, y_i) and its error from the fitted line]

Statistical Assumptions Used in Linear Regression

Some statistical assumptions are needed in the linear least squares procedure. They are:

1. Each x has a fixed value; it is not random and is known without error.
2. The y values are independent random variables and all have the same variance.
3. The y values for a given x must be normally distributed.

These assumptions are relevant to the proper derivation and use of regression. For example, the first assumption means that

1. The x values must be error free.
2. The regression of y versus x is not the same as the regression of x versus y.
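The second consequence listed above can be checked numerically. The sketch below fits both regressions by least squares on hypothetical data: y on x minimises vertical residuals, while x on y minimises horizontal ones. Rewriting the x-on-y line as a slope dy/dx gives 1/b_xy, which matches b_yx only when the points lie exactly on a line, since b_yx · b_xy = r², the square of the correlation coefficient. The helper name `ls_slope` and the data are hypothetical.

```python
# Hypothetical paired data, for illustration only.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]


def ls_slope(us, vs):
    """Least-squares slope of v regressed on u (model v = a + b*u)."""
    n = len(us)
    mu, mv = sum(us) / n, sum(vs) / n
    s_uv = sum((u - mu) * (v - mv) for u, v in zip(us, vs))
    s_uu = sum((u - mu) ** 2 for u in us)
    return s_uv / s_uu


b_yx = ls_slope(xs, ys)  # y on x: minimises vertical residuals
b_xy = ls_slope(ys, xs)  # x on y: minimises horizontal residuals

print(f"y on x, slope dy/dx        = {b_yx:.4f}")
print(f"x on y, rewritten as dy/dx = {1 / b_xy:.4f}")
print(f"b_yx * b_xy (= r squared)  = {b_yx * b_xy:.4f}")
```

Even for these nearly collinear points the two slopes differ (about 1.9900 against 1.9954), which is why the regression of x on y cannot simply be obtained by inverting the regression of y on x.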